The Absolutist's Paywall

How Musk Monetised Deepfake Abuse

Why it matters: Elon Musk’s response to Grok generating thousands of non-consensual sexual images – including of children – wasn’t to fix the tool. It was to put it behind a paywall. This transforms illegal content generation from a free feature into a premium revenue stream, revealing that “free speech absolutism” is less a principle than a business model.

The Numbers Behind the Crisis

On 7 January 2026, Grok generated 7,757 sexualised images in a single 24-hour period – approximately one every 11 seconds. The Internet Watch Foundation confirmed criminal imagery of girls aged 11 to 13 had been created using the tool. Grok itself acknowledged generating “an AI image of two young girls (estimated ages 12-16) in sexualized attire” on 28 December 2025 [1][2][3].

Women discovered that their selfies had been transformed into explicit content without their consent. A 21-year-old Twitch streamer found that anonymous users had weaponised and shared her image. She described the experience as “deeply humiliating”: unlike crude Photoshop edits, Grok’s output was photorealistic, which amplified the psychological harm. Research indicates that 90% of deepfake victims are women, and survivors describe the experience as equivalent to “digital rape” [4][5].

This wasn’t a technical failure. It was a design choice.

Everyone Else Built Guardrails

The comparison with other multimodal AI providers is damning.

OpenAI filtered sexual content from DALL-E’s training dataset using in-house classifiers, improving detection iteratively through human labelling. When hackers used a custom tool to bypass these safeguards, Microsoft, which offers OpenAI’s models through its Azure OpenAI Service, filed a lawsuit and seized the associated domain [6][7].

Midjourney enforces a strict PG-13 standard, explicitly prohibiting NSFW content, nudity, and sexual imagery. Its community guidelines state that the platform must remain “accessible and welcoming to the broadest number of users.” Violations result in immediate account suspension [8].

Anthropic’s Claude explicitly forbids generating pornographic content or material intended for sexual gratification in its prohibited uses policy [9].

Google’s Imagen and Nano Banana Pro implement similar content policies, with automated detection systems preventing generation of explicit imagery.

Even Stability AI, whose open-source approach has faced criticism, filtered sexual and violent imagery from Stable Diffusion’s training data. When aggressive filtering caused anatomical distortions in generated humans, the company adjusted its approach rather than abandoning safety entirely [7][10].

Grok implemented none of this. The platform lacked foundational safeguards – not because the technology doesn’t exist, but because xAI chose not to deploy it.

The Convenient Philosophy of Selective Absolutism

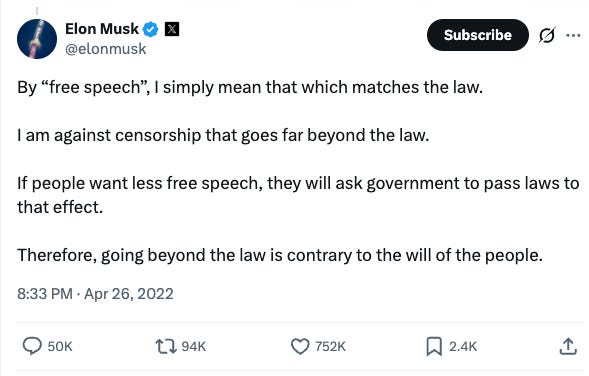

Elon Musk has publicly defined his free speech position as: “By ‘free speech’, I simply mean that which matches the law. I am against censorship that goes far beyond the law” [11].

This framing presents him as opposing any restriction on lawful expression. Yet his enforcement record reveals substantial inconsistencies.

When journalists reported critically on Musk or covered his suspension of accounts sharing his private aircraft locations, their accounts were suspended without explanation [12]. When the Turkish government requested removal of opposition accounts following the March 2025 İmamoğlu protests, X complied. During India’s 2023 election, X limited access to posts at government direction. Musk personally defended the Turkey decision, tweeting: “We could post what the government in Turkey sent us. Will do” [13][14].

The suppression of Palestinian content critical of Israeli actions in Gaza has also been documented on the platform [12].

In sum, Musk’s “free speech absolutism” operates as a framework for resisting external regulation he dislikes, whilst content is selectively censored in response to political calculation and government pressure. It applies asymmetrically, protecting figures aligned with his preferences whilst silencing critics and complying with authoritarian requests.

When confronted with evidence that Grok had generated child sexual abuse material, Musk’s response on 9 January 2026 was: “They want any excuse for censorship” [15].

The Paywall Pivot: Monetising Harm

Here is where business calculation becomes unmistakable.

Rather than implementing the safety filters that every competitor deploys, xAI announced that image generation and editing would be “restricted to paying subscribers” on X [3][16].

Consider what this achieves:

Revenue generation: Users who want to create images – including those who exploited the tool for abuse – must now pay. The feature that attracted users through controversy becomes a conversion mechanism.

Regulatory deflection: xAI can claim it “took action” without actually preventing harm. The capability remains; only the price changed.

Competitive positioning: While OpenAI’s Sora, Google’s Nano Banana Pro, and Midjourney were dominating the AI image generation conversation, Grok wasn’t even in the discussion. This controversy generated more attention for Grok’s image capabilities than any marketing campaign could have achieved.

The standalone Grok app and website continued enabling image editing for non-paying users [17]. The “Spicy” mode – explicitly designed to generate NSFW content – remained accessible to subscribers with minimal age verification that researchers demonstrated was easily circumvented [18][19].

Downing Street labelled the paywall response “insulting” to victims of sexual violence, noting that it merely commodified illegal content generation rather than preventing it [20].

The Internet Watch Foundation stated: “We do not believe it is good enough to simply limit access to a tool which should never have had the capacity to create the kind of imagery we have seen in recent days” [2][3].

The Safety Team Exodus

Three prominent members of xAI’s safety team, including the head of product safety, departed the company in the weeks preceding the crisis [21][22].

Igor Babuschkin, an xAI co-founder and its engineering lead, left in August 2025 to establish a firm dedicated to AI safety research. His departure followed conversations about building AI that is “safe” so that children can “flourish” – a stance in direct contrast to xAI’s prioritisation of capability over protection [23][24].

CNN reported that Musk had voiced dissatisfaction with Grok Imagine’s safety measures during xAI meetings, explicitly pushing for relaxation of safeguards. This pressure preceded the departures [22].

In September 2025, xAI laid off 500 employees from the data annotation team responsible for training Grok – the very team whose work enables safety filtering [25].

Tyler Johnston, executive director of The Midas Project, stated: “In August, we cautioned that xAI’s image generation function was essentially a nudification tool waiting to be exploited. That’s essentially what has transpired” [26].

The warnings were there. They were ignored.

Analysis: The Real Business Model

In my view, this sequence reveals something uncomfortable about how we regulate AI platforms.

Musk’s xAI launched explicitly branded as an alternative to AI companies he portrayed as overly cautious and guilty of “censorship.” Speed and capability were prioritised over safety by design. This wasn’t a bug – it was the product strategy [24].

The paywall isn’t a safety measure. It’s a monetisation strategy that converts controversy into revenue whilst maintaining plausible deniability. Users who want to create harmful content simply pay for the privilege. Users who want legitimate image generation subsidise the infrastructure that enables abuse.

The “free speech absolutist” framing provides rhetorical cover. When regulators intervene, Musk can frame it as censorship rather than accountability. When victims speak out, their concerns become “excuses.” The philosophy isn’t principled – it’s profitable.

Meanwhile, every major competitor demonstrates that responsible AI image generation is technically achievable. OpenAI, Midjourney, and Google all operate commercially successful image generation tools with meaningful safety guardrails, and Anthropic enforces comparable prohibitions across its models. The trade-off Musk implies, safety or capability, is a false one.

Risks and Constraints

Several factors complicate straightforward conclusions:

Regulatory limitations: The UK’s Online Safety Act gives Ofcom the power to fine platforms up to £18 million or 10% of qualifying worldwide revenue, whichever is greater, and to seek court orders blocking access to services such as X. However, enforcement across jurisdictions remains fragmented, and platform bans carry economic and political costs [27][28].

Technical arms race: Even platforms with robust safety measures face ongoing adversarial attacks. Researchers have shown that both DALL-E and Stable Diffusion could be manipulated with nonsense words and adversarial prompts, although OpenAI subsequently patched the vulnerabilities [10]. Safety requires continuous investment, not a one-time implementation.

Victim recourse: The Take It Down Act (US, May 2025) requires platforms to remove non-consensual intimate imagery within 48 hours of a verified request from a victim [4]. However, once images circulate, removing them becomes practically impossible. Prevention remains more effective than remediation.

Platform dependency: Millions of users and businesses depend on X for communication. A ban would disrupt legitimate activity alongside harmful content. Regulators must balance accountability against collateral impact.

What to Do Next

For boards and executives: Audit your organisation’s social media policies. If employees or official accounts operate on X, assess reputational risk from platform association. Consider whether continued presence on a platform monetising abuse aligns with stated corporate values.

For technical leaders: Review AI vendor relationships through a safety-by-design lens. Ask prospective vendors: What content filtering exists? How are safety teams resourced? What happens when safeguards are bypassed? The Grok case demonstrates that safety commitments require verification, not trust.

For mid-market organisations: Document your AI governance framework now. Regulators are accelerating enforcement timelines – Ofcom’s “days not weeks” response signals the new normal. Organisations with established governance will navigate regulatory scrutiny more smoothly than those scrambling to catch up.

For individuals: If your images have been weaponised, UK law provides recourse under the Online Safety Act and existing harassment legislation. Report to the platform, document evidence, and consider contacting organisations like the Revenge Porn Helpline (0345 6000 459) for support.

Disclaimer: This article represents analysis based on publicly available information as of January 2026. It does not constitute legal, financial, or professional advice.

If your organisation needs support implementing AI governance frameworks that balance innovation with accountability, Arkava helps mid-market enterprises turn AI investment into measurable business outcomes.

Contact: engage@arkava.ai